From Uncertainty to Insight

Master Complex Systems with Causal Bayesian Networks

Make data-driven safety decisions with transparency, traceability, and confidence. We turn uncertainty and risk quantification into explainable safety insights that support ISO 21448 (SOTIF), Functional Safety, and autonomous system validation.

Quantification by Statistical Methods, Causal Models & Monte Carlo Simulation

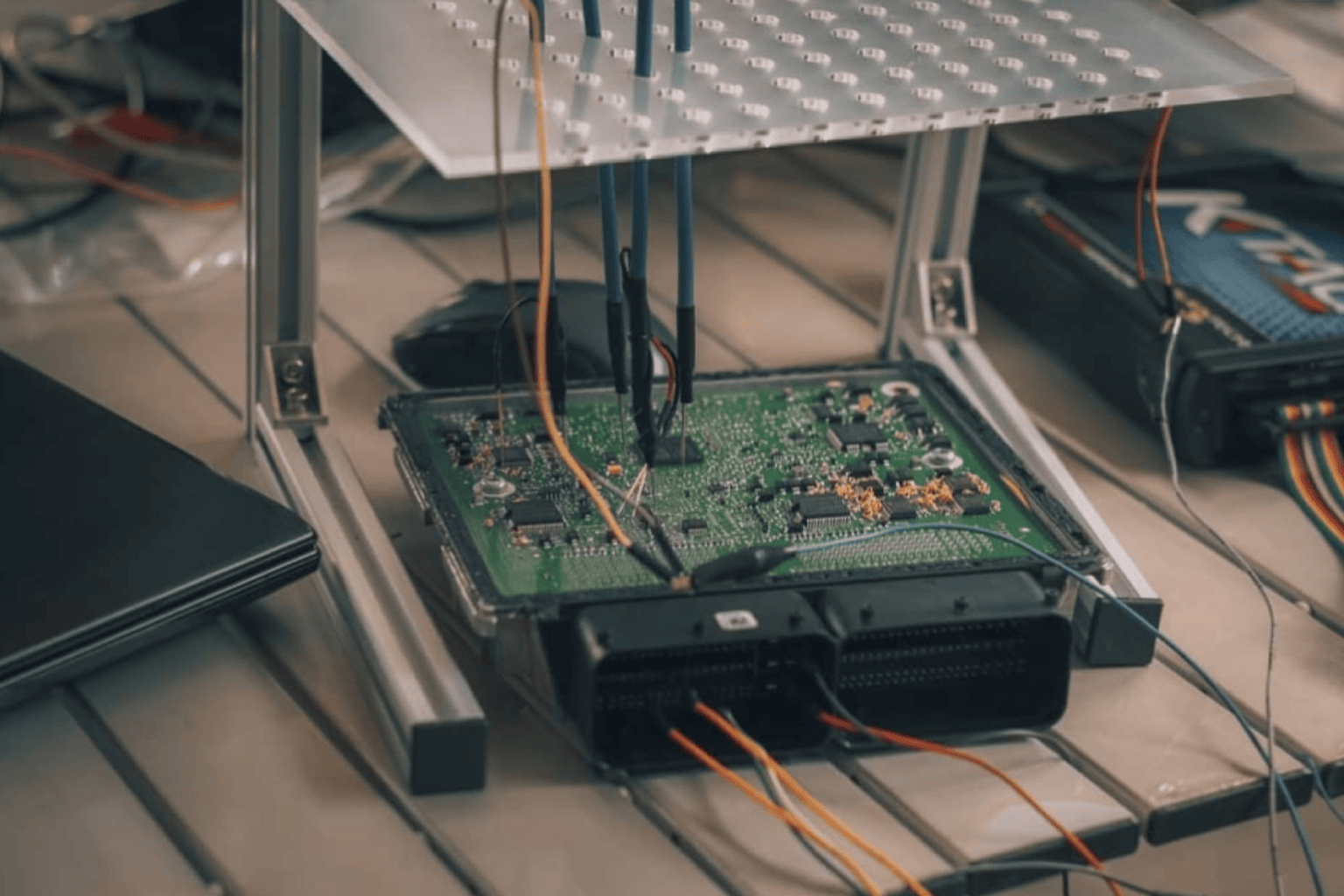

exida's safety engineers provide a structured, model-based evaluation workflow that FuSa and SOTIF practitioners and assessors can easily understand. You bring the data and your tolerable risk targets; we deliver quantified results and explainable reasoning your assessors can rely on.

Benefits

Models that fit your data

Based on your real-world data collection, we create mathematical models tailored for safety evaluation, uncertainty quantification, and SOTIF analysis.

A systematic approach to demonstrable safety evidence

Our workflow combines statistical methods, simulations, Bayesian inference, and structured analysis to deliver reliable evidence for your tolerable risk targets.

Explainable statistics to understand system limitations

Autonomous systems must anticipate what comes next.

We connect quantitative risk assessment, Bayesian reasoning, and performance limitations to give you explainable statistical insights, transparent safety arguments, early detection of potential triggering conditions, and measurable evidence for ISO 21448.

You gain clarity where systems face uncertainty.

Upgrading Fault Trees to address today's complexity.

Quantify what others can only guess.

With expertise and a framework built for explainable performance.

Whether you’re exploring Bayesian Networks, shaping your SOTIF strategy, or advancing Functional Safety (FuSa), we meet you where complexity begins.

With deep expertise in mathematics, statistics, and safety engineering, we turn uncertainty into confidence. From probabilistic modeling to safety analysis, training, engineering, and certification, we provide the clarity, tools, and evidence needed to make intelligent systems truly safe.


Startups

Turning innovation into explainable safety

Innovation moves fast, but safety cannot be an afterthought.

For AI-driven startups working with incomplete data and rapid prototyping, Bayesian reasoning enables structured, scalable safety foundations that match your pace.

We help you apply Bayesian Networks early in development, quantify uncertainty in concepts and prototypes, create explainable, traceable arguments for ISO 21448 / ISO 26262, and prepare for future OEM collaboration and assessments.

Automotive Suppliers

Building safety in every layer

Suppliers must demonstrate clear, quantifiable safety evidence, even without full-system validation responsibility. We help you translate system-level safety goals into component-level Bayesian models and structured arguments aligned with ISO 26262 and SOTIF.

You get deliverables that are technically sound, traceable to OEM safety goals, and ready for seamless integration into higher-level safety cases.

Innovation Units in OEMs

From uncertainty to confidence, by design

Safety in autonomous systems is no longer just about testing; it’s about understanding system behavior. We combine Bayesian reasoning, SOTIF insights, and FuSa structure to help you explain complex AI and autonomy behavior, quantify rare or safety-critical events, justify tolerable risk values with data-driven arguments, and meet stringent OEM validation requirements.

With transparent evidence and quantitative reasoning, you can prove what others can only assume.

Common challenges when quantifying uncertainties

Quantifying the uncertainty of autonomous driving systems means facing the unknown: incomplete data, unexpected scenarios, and system behaviors that defy prediction.

How to deal with incomplete data

Real-world data is never perfect, but safety decisions can’t wait.

Bayesian Networks combine available data with expert knowledge to infer missing information and uncover hidden dependencies.

The result: transparent, explainable insights, even under uncertainty.
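To make the idea concrete, here is a minimal Python sketch, not exida's tooling, of a hypothetical three-node chain Fog → SensorDegraded → MissedDetection. An expert's Beta prior on the probability of fog is updated with counts from logged drives, and the unobserved sensor state is summed out to infer how likely fog was, given that a detection was missed. All node names, probabilities, and counts are invented for illustration.

```python
from itertools import product


def beta_posterior_mean(a, b, successes, trials):
    """Posterior mean of P(event) for a Beta(a, b) prior updated with binomial counts."""
    return (a + successes) / (a + b + trials)


# Hypothetical expert prior Beta(2, 38) (mean 5 %) combined with 3 foggy drives out of 60 logged.
P_FOG = beta_posterior_mean(a=2, b=38, successes=3, trials=60)

# Hypothetical conditional probability tables.
P_DEGRADED = {True: 0.40, False: 0.02}  # P(sensor degraded | fog / no fog)
P_MISS = {True: 0.10, False: 0.001}     # P(missed detection | degraded / not degraded)


def joint(fog, degraded, miss):
    """Joint probability from the chain factorization P(F) * P(D | F) * P(M | D)."""
    p_f = P_FOG if fog else 1 - P_FOG
    p_d = P_DEGRADED[fog] if degraded else 1 - P_DEGRADED[fog]
    p_m = P_MISS[degraded] if miss else 1 - P_MISS[degraded]
    return p_f * p_d * p_m


def posterior_fog_given_miss():
    """P(fog | missed detection), summing out the unobserved sensor state."""
    numerator = sum(joint(True, d, True) for d in (True, False))
    evidence = sum(joint(f, d, True) for f, d in product((True, False), repeat=2))
    return numerator / evidence


if __name__ == "__main__":
    print(f"P(fog) = {P_FOG:.3f}")
    print(f"P(fog | missed detection) = {posterior_fog_given_miss():.3f}")
```

With these numbers the prior P(fog) is 0.05, and observing a missed detection raises it to roughly 0.42. Exact enumeration like this only scales to small networks; larger models typically rely on dedicated inference algorithms.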

How to face the "unknown"

Autonomous systems will always encounter unexpected situations.

We help you identify, model, and manage these unknowns, turning uncertainty into measurable, explainable safety.

Because true safety means being prepared for what cannot be predicted.

Complex and unexpected scenarios

Safety-critical events are rare and cannot be tested exhaustively on real roads.

By modeling interactions and predicting rare events, Bayesian Networks help you quantify system behavior beyond what testing alone can show.

This creates confidence where experience falls short.
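A back-of-the-envelope Python sketch shows why testing alone struggles. Under a constant-rate (exponential) assumption, demonstrating a hazardous-event rate below a target with zero observed events requires roughly -ln(1 - C) / target hours of failure-free operation at confidence C. A causal model can instead factor the rare event into more frequent steps that can be measured separately. The chain below (triggering condition, perception miss, fallback failure) and all rates are hypothetical, and the product assumes each step depends only on the one before it.

```python
import math


def zero_failure_test_hours(target_rate_per_hour, confidence=0.95):
    """Failure-free hours needed so the one-sided upper confidence bound on a
    constant (exponential) failure rate falls below the target rate."""
    return -math.log(1 - confidence) / target_rate_per_hour


def chained_event_rate(trigger_rate_per_hour, p_miss_given_trigger, p_fallback_fails_given_miss):
    """Hazardous-event rate as a product of factors along a hypothetical causal chain."""
    return trigger_rate_per_hour * p_miss_given_trigger * p_fallback_fails_given_miss


if __name__ == "__main__":
    target = 1e-7  # hypothetical tolerable hazardous-event rate, per hour
    print(f"Failure-free hours needed: {zero_failure_test_hours(target):.3e}")
    rate = chained_event_rate(1e-2, 1e-3, 1e-2)  # hypothetical factor values
    print(f"Modeled hazardous-event rate: {rate:.1e} per hour")
```

For a target of 10^-7 per hour, about 3 × 10^7 failure-free hours would be needed, whereas each factor in the chain can be estimated on its own, for example from targeted tests or simulation of the triggering condition. The result is only as trustworthy as the assumed causal structure.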

Why a systematic approach matters

A safety argument is only as strong as its foundations.

By documenting your assumptions, decisions, and data context systematically, we create a traceable, scientifically sound framework for SOTIF and Functional Safety.

Curious?

Read our publications!

"Explainable Statistical Evaluation and Enhancement of Automated Driving System Safety Architectures"

Can we trust deep neural networks in automated driving safety?

Deep neural networks (DNNs) power perception and control in automated driving systems (ADS), but their complexity makes functional safety and SOTIF assessments nearly impossible. Our paper introduces an explainable workflow using Bayesian networks and physical models to identify dominant risk factors and evaluate tolerable risk targets. Discover why static operational design domain (ODD) definitions fail, and how a dynamic protection layer can make the difference.

"Safety integrity framework for automated driving"

How do you prove an automated driving system is truly safe?

BMW’s first SAE Level 3 ADS didn’t just meet safety standards; it set a new benchmark. This paper reveals the comprehensive safety framework behind its development and regulatory approval, combining systems engineering, Bayesian analysis, and stochastic simulation to achieve a Positive Risk Balance. Learn how uncertainty quantification, redundancy, and advanced analytics were integrated into the V-Model for rigorous, transparent safety assurance.

"A Statistical View on Automated Driving System Safety Architectures"

How safe is “safe enough” for automated driving?

Meeting tolerable risk targets for SAE Level 3+ AD functions is harder than it seems. Our latest research reveals why current sense-plan-act architectures, and even two-channel redundant systems, may fall short. Explore the role of sensor correlation and common-cause failures, and why adding a warning subsystem could change the game.
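The effect of sensor correlation can be illustrated with a small Python sketch, assuming a hypothetical shared condition (labelled rain here) that raises the failure probability of both channels of a two-channel system. The channels are independent given the condition but not independent overall, so multiplying their marginal failure probabilities underestimates the chance that both fail. The numbers are invented, the Monte Carlo estimate only cross-checks the exact value, and this is not the model used in the paper.

```python
import random

P_RAIN = 0.10                       # hypothetical probability of the shared condition
P_FAIL = {True: 0.20, False: 0.01}  # hypothetical per-channel failure prob. given rain / no rain


def marginal_channel_failure():
    """P(one channel fails), marginalizing over the shared condition."""
    return P_RAIN * P_FAIL[True] + (1 - P_RAIN) * P_FAIL[False]


def joint_failure_independent():
    """P(both channels fail) if the channels are (wrongly) assumed independent."""
    p = marginal_channel_failure()
    return p * p


def joint_failure_common_cause():
    """P(both channels fail) when both depend on the same condition and are
    conditionally independent given rain / no rain."""
    return P_RAIN * P_FAIL[True] ** 2 + (1 - P_RAIN) * P_FAIL[False] ** 2


def joint_failure_monte_carlo(n=200_000, seed=1):
    """Monte Carlo estimate of P(both channels fail) under the common-cause model."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        rain = rng.random() < P_RAIN
        a_fails = rng.random() < P_FAIL[rain]
        b_fails = rng.random() < P_FAIL[rain]
        if a_fails and b_fails:
            hits += 1
    return hits / n


if __name__ == "__main__":
    print(f"Independence assumption: {joint_failure_independent():.6f}")
    print(f"Common-cause model:      {joint_failure_common_cause():.6f}")
    print(f"Monte Carlo check:       {joint_failure_monte_carlo():.6f}")
```

With these numbers each channel fails with probability 0.029, so the independence assumption predicts 0.000841 for a double failure, while the common-cause model gives 0.00409, almost five times higher.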